Recently I’ve been investigating using Artificial Neural Networks to solve a classification problem in my Master’s work. In this post I’ll share some of what I’ve learned with a few simple examples.

An Artificial Neural Network (ANN) is a simplified emulation of one part of our brains. Specifically, it simulates the activity of neurons within the brain. Even though this technique falls under the field of Artificial Intelligence, it is so simple by itself as to be almost unrelated to any form of actual self-aware intelligence. That’s not to say it can’t be useful.

First, a quick refresher on how ANNs work.

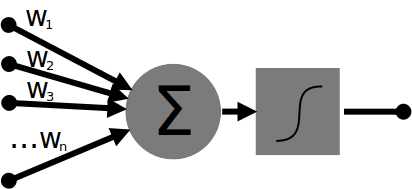

Each input value fed into a neuron is multiplied by a specific weight value for that input source. The neuron then sums all of the input * weight products. This sum is then fed through an activation function (typically the Sigmoid function) which determines the output value of the neuron, between 0 and 1 or -1 and 1. Neurons are arranged in a layered network, where the output from a given neuron is connected to one or more nodes in the next layer. This will be described in more detail with the first simple example. ANNs are trained by feeding data through the network and observing the error, then adjusting the weights throughout the network based on the output error.
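To make the arithmetic concrete, here is a minimal sketch of a single neuron in plain C++. It does not use FANN; the function names `sigmoid` and `neuron_output` are my own for illustration, and it leaves out details such as bias inputs.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Logistic sigmoid: squashes any real number into the range (0, 1).
double sigmoid(double x) {
    return 1.0 / (1.0 + std::exp(-x));
}

// Output of one neuron: the weighted sum of its inputs, passed through
// the activation function. inputs and weights must have the same length.
double neuron_output(const std::vector<double>& inputs,
                     const std::vector<double>& weights) {
    assert(inputs.size() == weights.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < inputs.size(); ++i) {
        sum += inputs[i] * weights[i];  // input * weight
    }
    return sigmoid(sum);
}
```

With all inputs at zero the weighted sum is zero, so `neuron_output({0.0, 0.0}, {0.5, -0.5})` sits exactly in the middle of the Sigmoid's range at 0.5. Large positive or negative sums push the output towards 1 or 0.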

So why FANN? There are certainly plenty of choices out there when it comes to creating ANNs. FANN is one of the most prevalent libraries for creating practical neural network applications. It is written in C but provides an easy-to-use C++ interface, among many others. Despite its reasonably friendly interface (the C++ interface could benefit from being more idiomatic), FANN can still be counted on to provide performance in both training and running modes. Mostly I’m using FANN because of its maturity and ubiquity, which usually results in better documentation (whether first or third party) and better long-term support.

While using the latest stable version of FANN (2.2.0) for the first example in this post I ran into a bug in the C++ interface for the create_standard method of the neural_net object. This bug has persisted for about 6 years, and could have been around since the C++ interface was first introduced back in 2007. The last stable release (2.2.0) of FANN was in 2012, now over 4 years ago. There was a 5 year gap before that release too. The latest git snapshot seems to improve the ergonomics of the C++ interface a little, and includes unit tests. To install it on a Linux-based environment, simply run the following commands (requires Git and CMake):

|

|

Another issue that might trip new FANN users up is includes and linking. FANN uses different includes to switch between different underlying neural network data types. Including fann.h or floatfann.h will cause FANN to use the float data type internally for network calculations, and should be linked with -lfann or -lfloatfann respectively. Likewise doublefann.h and -ldoublefann will cause FANN to use the double type internally. Finally, as a band-aid for environments that cannot use float, including fixedfann.h and linking with -lfixedfann will allow the execution of neural networks using the int data type (this cannot be used for training). The header included will dictate the underlying type of fann_type. In order to use the C++ interface you must include one of the above C header files in addition to fann_cpp.h.

The most basic example for using a neural network is emulating basic boolean logic patterns. I’ll take the XOR example from FANN’s Getting Started guide and modify it slightly so that it uses FANN’s C++ interface instead of the C one. The XOR problem is an interesting one for neural networks because it is not linearly separable, and so cannot be solved with a single-layer perceptron.
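To see why a hidden layer is needed: a single neuron can only split its inputs with one straight line, and no single line separates (0,1) and (1,0) from (0,0) and (1,1). The sketch below is plain C++ with no FANN involved. It builds XOR from two hidden neurons and one output neuron, using a simple step activation and weights I picked by hand for illustration rather than learned ones.

```cpp
#include <cassert>

// Step activation: fires (1) when the weighted sum exceeds the threshold.
int step(double sum, double threshold) {
    return sum > threshold ? 1 : 0;
}

// XOR built from two hidden neurons and one output neuron.
// All weights and thresholds are hand-picked for illustration, not learned.
int xor_network(int a, int b) {
    int h_or  = step(1.0 * a + 1.0 * b, 0.5);  // fires if a OR b
    int h_and = step(1.0 * a + 1.0 * b, 1.5);  // fires only if a AND b
    // The output fires when the OR neuron is on and the AND neuron is off.
    return step(1.0 * h_or - 1.0 * h_and, 0.5);
}
```

Each hidden neuron draws one line through the input space; the output neuron combines the two regions, which is something a single-layer perceptron has no way to do. A trained network will usually settle on different weights that achieve the same result.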

The training code, train.cpp, which generates the neural network weights:

|

|

There aren’t too many changes from the original example here. I’ve defined an alias for unsigned int to save some typing, changed the const variables to constexpr, and moved the neuron counts for each layer into an array instead of passing them directly. One significant change I did make was to change the activation function from FANN::SIGMOID_SYMMETRIC to FANN::SIGMOID_STEPWISE. The symmetric function produces output between -1 and 1, while the non-symmetric Sigmoids produce an output between 0 and 1. The stepwise qualifier on the activation function I have used implies that it is an approximation of the Sigmoid function, so some accuracy is sacrificed for some gain in calculation speed. As we are dealing with discrete values at opposite ends of the scale, accuracy isn’t much of a concern. In reality for this example there is no difference between using either FANN::SIGMOID_SYMMETRIC or FANN::SIGMOID_STEPWISE, but there are applications where the activation function does affect the output. I encourage you to experiment with changing the activation function and observing the effect.
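For a feel of the difference between these functions, here is a rough sketch in plain C++. These are textbook forms rather than FANN’s own code: FANN also applies a steepness factor, which I’ve left out. The piecewise version only illustrates the idea of trading accuracy for speed; its sample points are my own choice and do not match FANN’s internal table.

```cpp
#include <cassert>
#include <cmath>

// Standard (non-symmetric) sigmoid: output in (0, 1).
double logistic(double x) {
    return 1.0 / (1.0 + std::exp(-x));
}

// Symmetric sigmoid: the same curve stretched and shifted to (-1, 1).
double logistic_symmetric(double x) {
    return 2.0 * logistic(x) - 1.0;
}

// Crude piecewise-linear approximation of logistic(): linear interpolation
// between a few precomputed points, clamped to 0 or 1 outside [-4, 4].
// The sample points are illustrative only.
double logistic_piecewise(double x) {
    static const double xs[] = {-4.0, -2.0, 0.0, 2.0, 4.0};
    static const double ys[] = {0.018, 0.119, 0.5, 0.881, 0.982};
    if (x <= xs[0]) return 0.0;
    if (x >= xs[4]) return 1.0;
    for (int i = 0; i < 4; ++i) {
        if (x < xs[i + 1]) {
            double t = (x - xs[i]) / (xs[i + 1] - xs[i]);
            return ys[i] + t * (ys[i + 1] - ys[i]);
        }
    }
    return 1.0;  // not reached
}
```

The approximation avoids the call to `std::exp` at the cost of a few hundredths of error between the sample points, which doesn’t matter when the targets are 0 and 1.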

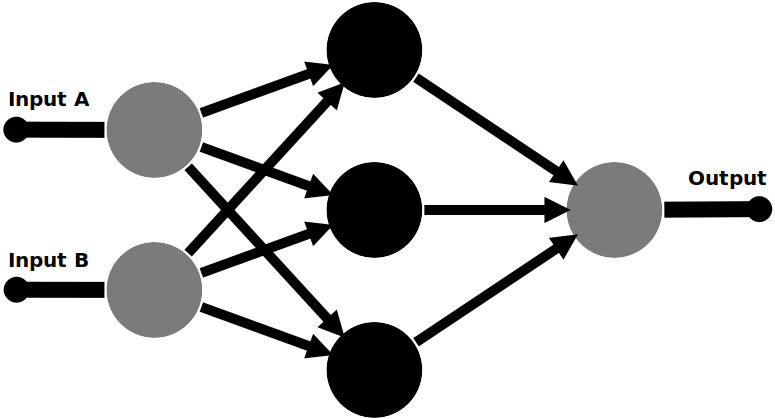

The network parameters in this training program describe a multi-layer ANN with an input layer, one hidden layer and an output layer. The input layer has two neurons, the hidden layer has three, and the output layer has one. The input and output layers obviously correspond to the desired number of inputs and outputs, but how is the number of hidden layer neurons or hidden layers calculated? Even with all of the research conducted on ANNs, this part is still largely driven by experimentation and experience. In general, most problems won’t require more than one hidden layer. The number of neurons has to be tweaked based on your problem. With too few you will probably see poor fit or generalization; too many is mostly a non-issue apart from driving up training and computation times.

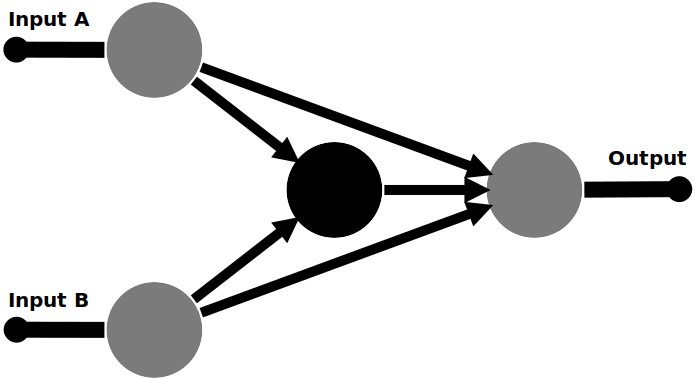

One optimization we can make to this network is to use the FANN::SHORTCUT network type instead of FANN::LAYER. In a standard multi-layer perceptron, all of the neurons in each layer are connected to all of the neurons in the next layer (see illustration above). With the SHORTCUT network type, each node in the network is connected to all nodes in all subsequent layers. In some cases (such as this one) shortcut connectivity can reduce the number of neurons required, because some layered neurons can be acting as pass-through neurons for subsequent layers. If we change the network type to FANN::SHORTCUT and reduce the number of hidden nodes to 1, the network topology becomes:

Fundamentally, this network produces exactly the same output as the layered network, but with fewer neurons.
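As a rough way to compare the two topologies, the sketch below counts the weighted connections in each. It is plain C++ with function names of my own, and it assumes the simplest possible model: bias connections are ignored, so the numbers will not match what FANN itself reports.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Layered network: every neuron connects to every neuron in the next layer only.
std::size_t layered_connections(const std::vector<std::size_t>& layers) {
    std::size_t total = 0;
    for (std::size_t i = 0; i + 1 < layers.size(); ++i) {
        total += layers[i] * layers[i + 1];
    }
    return total;
}

// Shortcut network: every neuron connects to every neuron in all later layers.
std::size_t shortcut_connections(const std::vector<std::size_t>& layers) {
    std::size_t total = 0;
    for (std::size_t i = 0; i < layers.size(); ++i) {
        for (std::size_t j = i + 1; j < layers.size(); ++j) {
            total += layers[i] * layers[j];
        }
    }
    return total;
}
```

Under these assumptions the layered 2-3-1 network has 9 connections, while the shortcut 2-1-1 network has 5, so there are fewer weights to train and evaluate.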

The input data, xor.data:

|

|

Note the input data format. The first line gives the number of input/output line pairs in the file, the number of inputs to the network, and the number of outputs. Following that are the training cases, with alternating input and output lines. Values on each line are space-separated. I’ve changed the data from the original example to be based on logic levels of 0 and 1 instead of -1 and 1.
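If you ever need to read this format yourself (to sanity-check a file before training, for instance), a small parser might look like the sketch below. It is plain C++ and not part of FANN; `TrainingSet` and `read_training_set` are names I made up. It relies on stream extraction skipping all whitespace, so it doesn’t check that values are on the right lines.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <stdexcept>
#include <vector>

struct TrainingSet {
    std::vector<std::vector<double>> inputs;
    std::vector<std::vector<double>> outputs;
};

// Reads the layout described above: a header with the pair count, input
// count and output count, then alternating input and output lines.
TrainingSet read_training_set(std::istream& in) {
    std::size_t pairs = 0, num_inputs = 0, num_outputs = 0;
    if (!(in >> pairs >> num_inputs >> num_outputs)) {
        throw std::runtime_error("missing or malformed header line");
    }
    TrainingSet set;
    for (std::size_t p = 0; p < pairs; ++p) {
        std::vector<double> input(num_inputs), output(num_outputs);
        for (double& v : input) {
            if (!(in >> v)) throw std::runtime_error("truncated input line");
        }
        for (double& v : output) {
            if (!(in >> v)) throw std::runtime_error("truncated output line");
        }
        set.inputs.push_back(input);
        set.outputs.push_back(output);
    }
    return set;
}
```

For an XOR file using 0 and 1 logic levels, the header would read `4 2 1`: four pairs, two inputs and one output.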

Finally, running the network with run.cpp:

|

|

The first part of the main function is just parsing the command-line arguments for input values, so the network can be tested against multiple inputs without having to recompile the program. The second part of the program has been translated into more idiomatic C++ and updated to use the new and improved C++ API from the in-development FANN version (tentatively labeled 2.3.0). The neural network is loaded from the file produced by the training program, and executed against the input.

Note that in order to run this code you will need to download and install the latest development version of FANN from the project’s GitHub repository.

I created a simple script to compile the code, run the training, and test the network.

|

|

Training Output:

|

|

Running Output:

|

|

The outputs aren’t exactly 1 or 0, but that’s part of the nature of Artificial Neural Networks and the Sigmoid activation function. ANNs approximate the appropriate output response based on inputs and their training. If they are over-trained they will produce extremely accurate output values for data that they were trained against, but such over-fitted networks will be completely useless for generalizing in response to input data that the network was not trained against. Generalization is a key reason for using an ANN instead of a fixed function or heuristic algorithm, so over-fitting is something we want to avoid. This property of ANN output is also useful for obtaining a measure of confidence in results produced. In this case we can threshold the outputs of the neural network at 0.5 to obtain a discrete 0 or 1 logic level output. From the results we can see that the network does indeed mimic a logical XOR function.
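The thresholding step is tiny, but worth spelling out. In this plain C++ sketch, `to_logic_level` applies the 0.5 threshold described above. `confidence` is a hypothetical helper of my own: one simple way to turn the distance from the threshold into a rough 0 to 1 score, assuming the outputs lie between 0 and 1.

```cpp
#include <cassert>
#include <cmath>

// Converts a continuous network output into a discrete logic level.
int to_logic_level(double output, double threshold = 0.5) {
    return output >= threshold ? 1 : 0;
}

// Rough confidence score: 0 when the output sits on the 0.5 threshold,
// 1 when it is exactly 0 or 1. Assumes the output is in [0, 1].
double confidence(double output) {
    return std::fabs(output - 0.5) * 2.0;
}
```

An output of 0.97 becomes a logic 1 with a confidence of roughly 0.94, while an output of 0.55 would also become a 1 but with a confidence of only about 0.1.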

How about another slightly more complex example? One of my favorite small data sets to play around with is the Iris data set from the UCI Machine Learning Repository. I modified the code I used for the XOR example above to increase the number of inputs, outputs and hidden neurons. The data set includes 4 inputs: sepal length, sepal width, petal length and petal width. The output is the class of the iris, which I have encoded as a 1.0 output on one of three outputs. The number of hidden neurons was increased through experimentation; 10 seemed like a reasonable starting point.
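The output encoding can be sketched in a few lines of plain C++. The function names are my own, and the order of the classes (setosa, versicolor, virginica) is an assumption; any fixed order works as long as training and testing agree on it.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Maps an Iris class label to an output index. The ordering is an
// assumption made for this sketch.
std::size_t iris_class_index(const std::string& label) {
    if (label == "Iris-setosa") return 0;
    if (label == "Iris-versicolor") return 1;
    if (label == "Iris-virginica") return 2;
    throw std::invalid_argument("unknown class label: " + label);
}

// Encodes a class as 1.0 on one output and 0.0 on all the others.
std::vector<double> one_hot(std::size_t class_index, std::size_t num_classes) {
    if (class_index >= num_classes) {
        throw std::out_of_range("class index exceeds number of classes");
    }
    std::vector<double> outputs(num_classes, 0.0);
    outputs[class_index] = 1.0;
    return outputs;
}
```

With this ordering, `one_hot(iris_class_index("Iris-versicolor"), 3)` gives the output line `0 1 0`.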

The training code:

|

|

The training data:

Download the training data here.

And to run the resulting network:

|

|

The training output:

|

|

To demonstrate the generalization capacity of neural networks, I took 9 random input/output pairs from the training data set and put them into a second file, iris.data.test (removing them from the iris.data training file). The program in run.cpp loads this data and runs the network against it. So bear in mind the results are against data that the network has never seen in training. The test data in iris.data.test follows the same format as the training file:

|

|
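I split the data by hand, but the same hold-out split could be automated along these lines. This is a plain C++ sketch, not the code I used; `Sample` and `split_holdout` are hypothetical names, and the seed is only there to make the shuffle repeatable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

// One input/output pair from the data file.
struct Sample {
    std::vector<double> input;
    std::vector<double> output;
};

// Shuffles the samples, then moves the last test_count of them into a
// separate test set. Returns {training set, test set}.
std::pair<std::vector<Sample>, std::vector<Sample>>
split_holdout(std::vector<Sample> samples, std::size_t test_count, unsigned seed) {
    assert(test_count <= samples.size());
    std::mt19937 rng(seed);
    std::shuffle(samples.begin(), samples.end(), rng);
    const auto cut = samples.end() - static_cast<std::ptrdiff_t>(test_count);
    std::vector<Sample> test(cut, samples.end());
    samples.erase(cut, samples.end());
    return {std::move(samples), std::move(test)};
}
```

Holding out 9 of the 150 Iris samples this way leaves 141 for training, and no sample ends up in both sets.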

The output from running the network against the test data:

|

|

In order to categorize the correctness of the output I rounded each output and compared it with the expected output for that input. Overall, the network correctly classified 9 of 9 random samples that it had never seen before. This is excellent generalization performance for a first attempt at such a problem, and with such a small training set. However, there is still room for improvement in the network; I did observe the ANN converging on a suboptimal solution a couple of times where it would only correctly classify about 30% of the input data and always produce 1.0 on the first output, no matter what the input. When I ran the best network against the training set, it would produce a misclassification rate of about 1-2% (2/141 was the lowest error rate I observed). This is a more realistic error rate than the 0% error rate of the small test data set.
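The scoring rule described above can be sketched as follows. This is plain C++ with function names of my own choosing; it rounds every output to 0 or 1 and only counts a sample as correct when all of the outputs match.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// A sample counts as correct when every output, rounded to 0 or 1,
// matches the corresponding expected value.
bool is_correct(const std::vector<double>& actual,
                const std::vector<double>& expected) {
    if (actual.size() != expected.size()) return false;
    for (std::size_t i = 0; i < actual.size(); ++i) {
        if (std::round(actual[i]) != std::round(expected[i])) return false;
    }
    return true;
}

// Fraction of samples that were misclassified.
double misclassification_rate(std::size_t wrong, std::size_t total) {
    return total == 0 ? 0.0
                      : static_cast<double>(wrong) / static_cast<double>(total);
}
```

For the best run against the training set, `misclassification_rate(2, 141)` works out to roughly 0.014, or about 1.4%.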

Improving the convergence and error rates could be achieved by having more training data, adjusting the topology of the network (number of nodes/layers and connectivity), or changing the training parameters (such as reducing the learning rate). One facility offered by FANN which can make this process a little less experimental is cascade training, which starts with a bare perceptron and gradually adds nodes to the network to improve its performance, potentially arriving at a more optimal solution.

With some experimentation I was able to remove 2 of the nodes from the network without visibly affecting its performance. Removing more nodes did increase the error slightly, and removing 5 nodes caused the network to sometimes fail completely to converge on a solution. I also experimented with reducing the desired error for training to 0.0001. This caused the network to become over-fitted: it would produce perfect results against the training data set (100% accuracy) but didn’t generalize as well for the data it hadn’t seen.

I found Artificial Neural Networks to be fairly easy to implement with FANN, and I have been very impressed by the performance obtained with minimal investment in network design. There are avenues to pursue in the future to further increase performance, but for most classification applications an error rate of 1-2% is very good.